5 Ways to Optimize Your Blog in Google Search Console

Published by Kenny Novak • Search Engine Optimization • Posted October 10, 2018 • ContentPowered.com

Google’s Search Console is a familiar sight to many marketers, particularly those in the habit of checking for search penalties, whether those stem from past gray hat techniques or simply from the age of their sites. Formerly known as Google Webmaster Tools, the Search Console is a collection of tools and data that can help with a variety of optimizations. I’ve put together the five best approaches to optimization that use only the data the Search Console gives you.

About the New Search Console

In January 2018, Google released a new version of the Search Console, and those who used the old one can migrate to it. This is worth doing even though you lose access to some data and reports: the new Search Console doesn’t have everything the old one had, but it has new features and is more tightly integrated with the rest of Google’s products.

Features are still being carried over; for example, Google only added the old console’s link reports to the new one in August 2018.

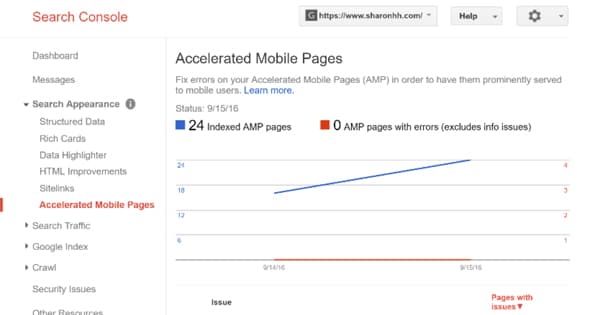

For now, the new Search Console offers a number of useful reports: index coverage, AMP status, rich result status, performance, and sitemaps. There’s also a URL inspection tool, a manual actions report, a links report, and a mobile usability report. How useful each one is depends on your site. For example, if you’re not using AMP, the AMP status report is meaningless to you.

1. Check For and Fix Manual Actions

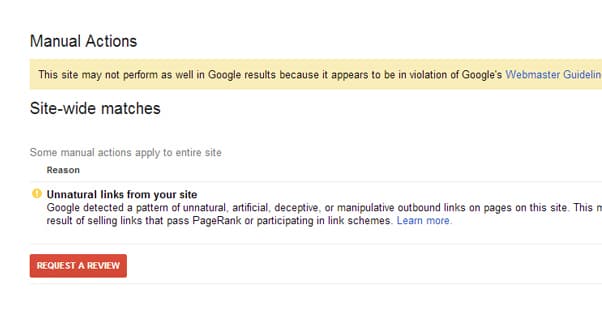

Manual actions are Google penalties. Some things we call penalties, like “Panda penalties”, are not actually penalties; they’re algorithm adjustments and the ranking drops that follow. Manual actions are deliberate ranking demotions, applied by a human reviewer at Google, for specific issues that Google points out for you to fix.

If your site has any kind of manual action taken against it, that penalty will show up in this report. You should take steps to fix any manual action before even thinking about other site optimizations. Manual actions are holding you back far more than any other lack of optimization. To use a car analogy, it would be like focusing on exactly when, to the millisecond, you should shift gears to get the most power from your vehicle, while ignoring the trunk full of bricks. Remove the bricks and you’ll go faster, sooner.

There are enough different manual actions to fill a post of their own, so I’m only going to gloss over each of them here. If one of them affects you, Google’s help documentation for that specific action explains how to fix it.

- Hacked Site. This action indicates that your site shows signs of being compromised, usually due to the installation of malware or other malicious code. Cloaked content can also trigger this penalty. Recovering from a compromised site is a monumental task, so make sure to go about it properly.

- User Spam. This action indicates that user-generated content, like web forum posts, guestbook entries, blog comments, and user profiles, is filled with spam. Check every part of your site that users can post to and remove the offending entries.

- Spammy Free Host. If a large share of the sites on your free web host are spammy, Google may act against the whole service, and your site along with it. Free hosts also tend to add ad banners or inject code into your pages, which looks awful and hurts your site. Move to a better host and request reconsideration.

- Spammy Structured Markup. This happens when structured data markup is used improperly: marking up content users can’t see, marking up the wrong elements, or making misleading claims. Fix your structured data and file a reconsideration request.

- Unnatural Links. There are two penalties here: one for unnatural links to your site, and one for unnatural links from your site. Avoid buying, selling, or otherwise manipulating links, or you’ll run afoul of Penguin.

- Thin Content. Low-quality, shallow pages are an official manual action penalty. Thin affiliate pages, automatically generated content, stolen or scraped content, doorway pages, and other thin pages can all earn you a penalty. Figure out which pages Google is targeting and either expand them with genuinely useful content or remove them.

- Cloaking. Showing Google’s crawlers different content than you show users, or sending users to a different page than the one they expected (a sneaky redirect), can result in a penalty. Don’t try to mislead the bots or your users.

- Pure Spam. If your pages are stuffed with junk, such as cloaked links, keyword stuffing, and other high-intensity spam techniques, Google will come down on them like a ton of bricks. If your site is this bad, it’s probably better to scrap it and start over.

- Cloaked Images. Showing one image to Google and another to your users is a violation of Google’s webmaster guidelines. Any hidden content of this sort is going to earn you a penalty. Remember, Google spot-checks their index, and their bots can see through simple redirects and cloaks.

- Hidden Content or Keyword Stuffing. Both of these are individual black hat techniques that haven’t worked since 1998, so if you’re still using them in 2018, it’s time to re-think your entire marketing strategy. If you’re getting this penalty and aren’t intentionally doing it, you’ll need to revamp the offending pages.

- AMP Content Mismatch. The AMP version of a page should carry essentially the same content as its non-AMP version. If they differ, bring them back into line and request reconsideration to get the penalty lifted.

- Sneaky Mobile Redirects. If you redirect mobile users to content that isn’t available to Google’s crawlers, it can earn you a penalty. A properly implemented responsive design generally eliminates this possibility.
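For the User Spam action in particular, a rough first pass is to flag user-submitted text that carries an unusual number of links. This is a hypothetical heuristic, not anything Google publishes: the `flag_spammy_comments` name, the link pattern, and the two-link threshold are all assumptions you’d tune for your own site.

```python
import re

# Assumption: counting raw http(s) URLs is a crude but useful spam signal.
LINK_PATTERN = re.compile(r"https?://", re.IGNORECASE)


def flag_spammy_comments(comments, max_links=2):
    """Return (index, link_count) for each comment with more than max_links links."""
    flagged = []
    for index, text in enumerate(comments):
        link_count = len(LINK_PATTERN.findall(text))
        if link_count > max_links:
            flagged.append((index, link_count))
    return flagged


# Hypothetical sample comments.
comments = [
    "Great post, thanks!",
    "Cheap pills http://a.example http://b.example http://c.example",
    "I wrote about this too: https://example.org/notes",
]
print(flag_spammy_comments(comments))  # [(1, 3)]
```

Flagged entries still deserve a human look before deletion, since a legitimate comment can contain several links.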

Whichever action applies, clicking on it shows one of three scopes: “All pages”, a URL ending in a subfolder, or a specific page URL. This tells you where on your site Google has seen the issue, so use it to track down the problem and fix it.
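If you keep a list of your site’s URLs, you can turn a manual action’s scope into the concrete set of pages to review. This is a minimal sketch: `urls_in_scope` is a hypothetical helper, and it assumes the scope appears either as the text “All pages” or as a full URL, with subfolder scopes ending in a slash.

```python
def urls_in_scope(scope, site_urls):
    """Return the site URLs covered by a manual-action scope (hypothetical helper)."""
    if scope.strip().lower() == "all pages":
        return list(site_urls)
    if scope.endswith("/"):
        # Assumed: a subfolder scope covers every URL beneath that path prefix.
        return [url for url in site_urls if url.startswith(scope)]
    # Otherwise treat the scope as a single, specific page.
    return [url for url in site_urls if url == scope]


site_urls = [
    "https://example.com/",
    "https://example.com/forum/thread-1",
    "https://example.com/forum/thread-2",
    "https://example.com/about",
]
print(urls_in_scope("https://example.com/forum/", site_urls))
# ['https://example.com/forum/thread-1', 'https://example.com/forum/thread-2']
```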

Once you have fixed the issue, find the “Request Review” button in the Search Console and file a reconsideration request. Google will review your site and check for the issues it previously identified. If those issues are resolved, Google will remove the relevant penalty.

2. Solve Indexing Problems

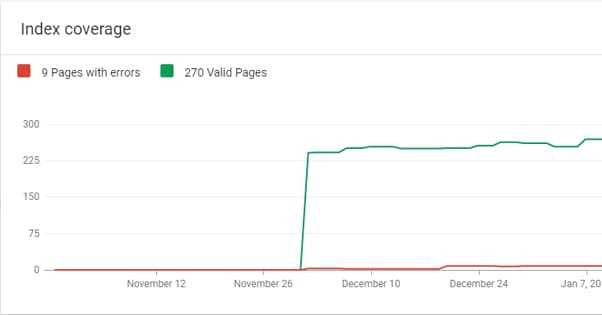

The Index Coverage status report shows you how much of your site Google can see: the number of pages on your site that Google has indexed, along with any indexing errors that come up.

You should look for a few possible problems. First, check whether there are any indexing errors and, more importantly, whether there’s a spike in them.

An indexing error means Google knows a page exists and should index it, but something is preventing that, usually a noindex directive or a robots.txt rule blocking the crawler. A spike in indexing errors usually points to a change you made to your site, such as an edit that broke a template file and started blocking pages. If a page should be indexed but isn’t, find what is blocking Google and fix it. If a page is indexed but shouldn’t be, add a noindex directive and leave the page crawlable so Google can see that directive; blocking the page in robots.txt alone won’t remove an already-indexed URL. Pages behind a login wall and utility files such as robots.txt don’t belong in search results either, so it doesn’t hurt to noindex them just in case (for non-HTML files, the directive can be sent in an X-Robots-Tag HTTP header).
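To see which of those two blockers applies to a particular page, you can check its robots.txt rules, its `X-Robots-Tag` header, and its meta robots tag yourself. This sketch uses only Python’s standard library; `blocked_reasons` is a hypothetical helper, and it assumes you’ve already fetched the page’s HTML, response headers, and robots.txt lines by whatever means you prefer.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class MetaRobotsParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(values.get("content", "").lower())


def blocked_reasons(url, html, headers, robots_txt_lines):
    """List the reasons Googlebot might not index this page (hypothetical helper)."""
    reasons = []

    # 1. A robots.txt rule that stops Googlebot from crawling the URL at all.
    robots = RobotFileParser()
    robots.parse(robots_txt_lines)
    if not robots.can_fetch("Googlebot", url):
        reasons.append("blocked by robots.txt")

    # 2. A noindex directive sent in the X-Robots-Tag HTTP header.
    lowered = {key.lower(): value.lower() for key, value in headers.items()}
    if "noindex" in lowered.get("x-robots-tag", ""):
        reasons.append("noindex in X-Robots-Tag header")

    # 3. A noindex directive in a meta robots (or meta googlebot) tag.
    parser = MetaRobotsParser()
    parser.feed(html)
    if any("noindex" in directive for directive in parser.directives):
        reasons.append("noindex in meta robots tag")

    return reasons


robots_lines = ["User-agent: *", "Disallow: /private/"]
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(blocked_reasons("https://example.com/private/page", page, {}, robots_lines))
# ['blocked by robots.txt', 'noindex in meta robots tag']
```

The checks are reported separately because a URL blocked by robots.txt can’t be crawled, so Google never gets to read a noindex tag on it.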

If you know your site has 1,000 pages but only 400 are indexed, you may have a subfolder or subdomain blocked. You should be able to identify what is being blocked by looking at what is indexed and figuring out the shape of the holes.
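One way to find the shape of the holes is to compare a full list of your URLs (from your CMS, for example) against the URLs Google reports as indexed (for example, exported from the report) and count what’s missing per subdomain and first path segment. The `missing_by_section` helper and its inputs are assumptions for illustration.

```python
from collections import Counter
from urllib.parse import urlsplit


def missing_by_section(site_urls, indexed_urls):
    """Count unindexed URLs per (host, first path segment) to reveal blocked sections."""
    indexed = set(indexed_urls)
    gaps = Counter()
    for url in site_urls:
        if url in indexed:
            continue
        parts = urlsplit(url)
        # "/shop/item-1" -> "/shop"; the home page "/" stays "/".
        top_level = "/" + parts.path.strip("/").split("/")[0]
        gaps[(parts.netloc, top_level)] += 1
    return gaps


site = [
    "https://example.com/",
    "https://example.com/about",
    "https://example.com/shop/a",
    "https://example.com/shop/b",
    "https://example.com/shop/c",
    "https://blog.example.com/post-1",
]
indexed = ["https://example.com/", "https://example.com/about"]
for (host, section), count in missing_by_section(site, indexed).most_common():
    print(host, section, count)
# example.com /shop 3
# blog.example.com /post-1 1
```

A section where every URL is missing, like `/shop` here, is a strong hint that something blocks that whole folder.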

Sometimes Google simply overlooks pages. It discovers new pages by following links, reading sitemaps, and through other forms of notification. If Google hasn’t discovered certain pages, it’s a good idea to build a complete sitemap and submit it to Google so it can find everything.
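A basic sitemap is just an XML list of URLs in the sitemaps.org format. This minimal sketch builds one with Python’s standard library; `build_sitemap` is a hypothetical helper, and optional tags the protocol allows (such as `<lastmod>`) are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemaps.org XML document listing each URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the text for us.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    "https://example.com/",
    "https://example.com/shop/a?page=2&sort=new",
]))
```

Save the output as a file such as sitemap.xml at your site root and submit its URL in the Sitemaps report. The protocol caps a single sitemap file at 50,000 URLs, so larger sites need several files tied together by a sitemap index.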

3. Confirm Mobile Usability

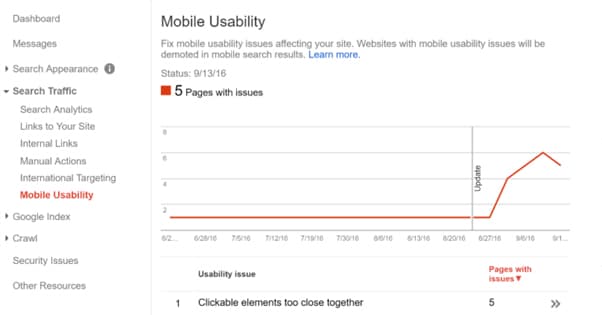

Google is putting more and more emphasis on mobile browsing every year, including the mobile-first indexing it began rolling out earlier this year. As such, the mobile usability report is crucial for modern optimization.

This report shows your indexed pages and whether each one has a mobile usability error. Note that some affected pages may not be listed, because their issues are minor compared with worse pages on your site. Essentially, the worse a page looks in the report, the more urgently it needs fixing. Once you’ve fixed the worst issues, check the report again and fix anything else that pops up. These are the errors that can appear:

- Flash Usage. If a page your mobile users see relies on Flash for media, you’ll see this error. Most mobile browsers don’t support Flash, and Adobe is ending support for it at the end of 2020 anyway, so it’s time to move away from the buggy, exploitable plugin.

- Viewport Not Configured. Your page lacks a meta viewport tag, which tells browsers how to size the page for the device’s screen. This error can appear even on otherwise functional adaptive or m. mobile sites, since Google treats the tag as part of responsive design.

- Fixed-Width Viewport. Setting your viewport to a fixed width is problematic because mobile devices come in a wide range of screen sizes. Use width=device-width instead of a fixed pixel value whenever possible.

- Content Not Sized to Viewport. If your page needs horizontal scrolling on a mobile device, this error pops up. Try to avoid anything too wide for a screen.

- Small Font Size. Your font is too small to be easily read on a mobile device without zoom. You’ll want to make it larger for your mobile version.

- Touch Elements Too Close. Any situation where a user might want to tap one link or element but could accidentally “fat finger” another one triggers this error. Space things out more.
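The first two viewport errors can be spotted before Google reports them by checking each page’s meta viewport tag. The commonly recommended tag is `<meta name="viewport" content="width=device-width, initial-scale=1">`. `viewport_issue` is a hypothetical helper that only parses the HTML you give it, so it can’t catch font-size, content-width, or tap-target problems, which depend on how the page actually renders.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Captures the content attribute of the first <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        values = {name: (value or "") for name, value in attrs}
        if (tag == "meta" and self.content is None
                and values.get("name", "").lower() == "viewport"):
            self.content = values.get("content", "")


def viewport_issue(html):
    """Return a short description of a viewport problem, or None if none is found."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.content is None:
        return "Viewport not configured"
    # Split "width=device-width, initial-scale=1" into {"width": ..., ...}.
    settings = {}
    for part in parser.content.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    width = settings.get("width")
    if width is not None and width != "device-width":
        return "Fixed-width viewport"
    return None


print(viewport_issue('<meta name="viewport" content="width=1024">'))  # Fixed-width viewport
```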

Sooner or later your mobile site will need to be more optimized. Might as well start now.

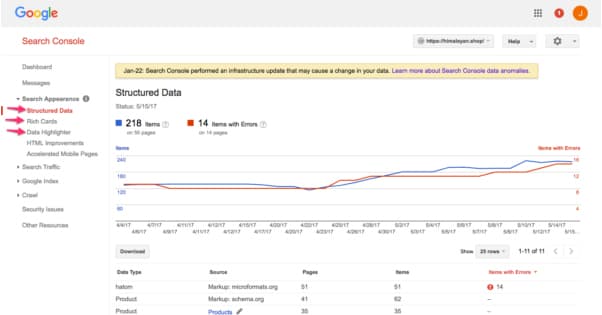

4. Check Rich Results

The Rich Result status report lists any pages on your site with the right kind of structured data to qualify for a rich result. For example, this search shows a box at the top with a basic preview of a recipe.

That’s a rich result. Rich results exist for job postings, recipes, and events, among other types of content. The report shows whether your site has any rich results, and if you think you should have some but don’t, you can use it to troubleshoot the problem. This only applies to sites that use the right kind of structured data, so it’s not high on my list.
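Rich results depend on structured data, commonly added as JSON-LD using schema.org types. As a minimal sketch, this builds a schema.org `Recipe` block; the helper name and sample values are hypothetical, and Google’s documentation lists which properties each rich result type requires or recommends, so check it before relying on this.

```python
import json


def recipe_json_ld(name, image_url, author, ingredients, steps):
    """Build a minimal schema.org Recipe as a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "image": [image_url],
        "author": {"@type": "Person", "name": author},
        "recipeIngredient": ingredients,
        "recipeInstructions": [{"@type": "HowToStep", "text": step} for step in steps],
    }
    # Escape "</" so text like "</script>" can't close the tag early; "\/" is valid JSON.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


print(recipe_json_ld(
    name="Simple Pancakes",
    image_url="https://example.com/images/pancakes.jpg",
    author="Jane Doe",
    ingredients=["1 cup flour", "1 egg", "1 cup milk"],
    steps=["Whisk everything together.", "Cook on a hot griddle until golden."],
))
```

The output goes in the page’s HTML; the Rich Result status report then shows whether Google could read it.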

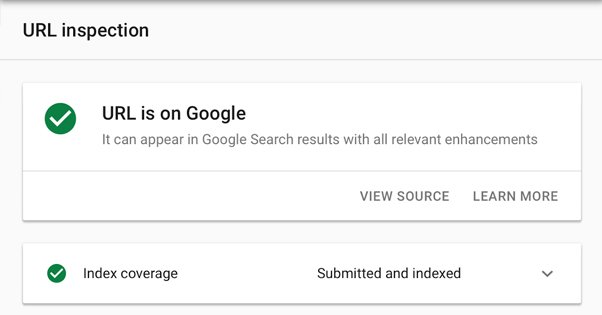

5. Use the URL Inspection Tool

The URL Inspection Tool in the Search Console lets you inspect a specific URL. You can compare Google’s indexed version with your live version, and see any errors or penalties relating to that page. You can also ask Google to fetch the live URL and test whether it can be indexed. Note that this test doesn’t index the page by itself, so it won’t get your site indexed any faster; to put the page in Google’s queue, use the separate “Request Indexing” feature.

When you inspect a URL, you can see whether it’s in the index, when Google last crawled it, and whether there are any errors relating to structured data, AMP, or other problems. Check any problem you see against Google’s reference table and work through the fixes for each issue that crops up.
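Google has since made this data available programmatically: the Search Console API’s URL Inspection endpoint takes an `inspectionUrl` and a `siteUrl` and returns an `inspectionResult` object. This sketch assumes you’ve already made that authenticated request and parsed the JSON; `summarize_inspection` is a hypothetical helper, the sample response is hand-written rather than real output, and the field names should be checked against the current API reference.

```python
import json


def summarize_inspection(response):
    """Pull headline fields out of a parsed URL Inspection API response."""
    result = response.get("inspectionResult", {})
    index_status = result.get("indexStatusResult", {})
    summary = {
        "verdict": index_status.get("verdict", "UNKNOWN"),
        "coverage": index_status.get("coverageState", ""),
        "last_crawl": index_status.get("lastCrawlTime", "never"),
    }
    # Enhancement sections appear only when they apply to the page.
    for section in ("mobileUsabilityResult", "richResultsResult", "ampResult"):
        if section in result:
            summary[section] = result[section].get("verdict", "UNKNOWN")
    return summary


# Hand-written sample for illustration, not real API output.
sample = json.loads("""
{
  "inspectionResult": {
    "indexStatusResult": {
      "verdict": "PASS",
      "coverageState": "Submitted and indexed",
      "lastCrawlTime": "2018-10-01T12:00:00Z"
    },
    "mobileUsabilityResult": {"verdict": "PASS"}
  }
}
""")
print(summarize_inspection(sample))
```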